Method

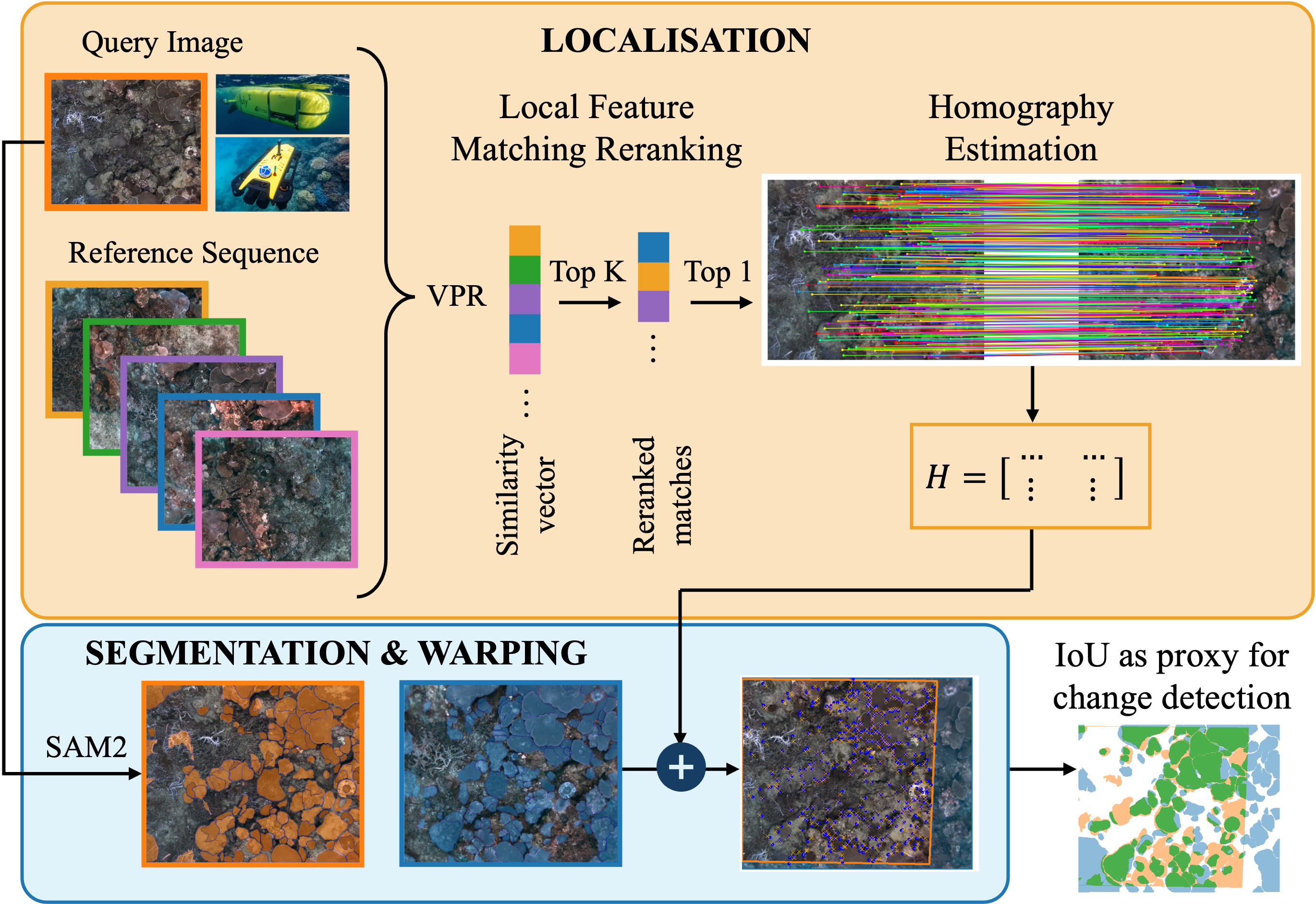

Underloc is an integrated pipeline, designed to enable reliable multi-year change detection, that combines Visual Place Recognition (VPR), local feature matching, and image segmentation on video-derived images. The method is hierarchical: MegaLoc, a state-of-the-art VPR method, first retrieves the top K database images for each query, and these candidates are then reranked using more computationally expensive local feature matching. We use LightGlue to establish keypoint correspondences between SuperPoint features and estimate a homography matrix that warps and aligns each query-database pair. We rerank the candidates by inlier count and discard those with a reprojection error greater than 10 pixels. To simulate a potential change detection method, we automatically extract segmentation masks for each image using Segment Anything 2 (SAM2). We then use the homography matrix to warp the masks into a common image space, enabling pixel-level comparison with intersection over union (IoU) as a similarity proxy.
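The reranking and mask-comparison steps can be illustrated with a minimal NumPy sketch. It is not the Underloc implementation: it assumes the homography `H` and the matched keypoints have already been produced (for example by LightGlue on SuperPoint features), and every function name, the candidate dictionary layout, and the 3-pixel inlier threshold are hypothetical choices made for illustration. The text does not say how the per-candidate reprojection error is aggregated, so the sketch assumes the mean error over all matched keypoints. Mask warping uses a simple inverse nearest-neighbour lookup in place of a library routine.

```python
import numpy as np

def project(H, pts):
    """Apply a 3x3 homography to an (N, 2) array of (x, y) points."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])
    out = pts_h @ H.T
    return out[:, :2] / out[:, 2:3]

def score_candidate(H, q_pts, db_pts, inlier_px=3.0):
    """Return (inlier count, mean reprojection error in pixels).

    inlier_px is an assumed threshold; the mean is taken over all matches.
    """
    err = np.linalg.norm(project(H, q_pts) - db_pts, axis=1)
    return int((err < inlier_px).sum()), float(err.mean())

def rerank(candidates, max_err_px=10.0):
    """Drop candidates above the error limit, then sort by inlier count.

    candidates: list of dicts with keys 'name', 'H', 'q_pts', 'db_pts'
    (a hypothetical layout).
    """
    kept = []
    for c in candidates:
        n_inliers, err = score_candidate(c["H"], c["q_pts"], c["db_pts"])
        if err <= max_err_px:
            kept.append((n_inliers, c["name"]))
    kept.sort(key=lambda item: -item[0])  # most inliers first
    return [name for _, name in kept]

def warp_mask(mask, H, out_shape):
    """Warp a boolean mask with H (source -> target) into out_shape (h, w)."""
    h, w = out_shape
    ys, xs = np.mgrid[0:h, 0:w]
    target = np.stack([xs.ravel(), ys.ravel()], axis=1).astype(float)
    # Inverse mapping: find the source pixel that lands on each target pixel.
    source = project(np.linalg.inv(H), target)
    sx = np.rint(source[:, 0]).astype(int)
    sy = np.rint(source[:, 1]).astype(int)
    valid = (sx >= 0) & (sx < mask.shape[1]) & (sy >= 0) & (sy < mask.shape[0])
    out = np.zeros(h * w, dtype=bool)
    out[valid] = mask[sy[valid], sx[valid]]
    return out.reshape(h, w)

def iou(a, b):
    """Intersection over union of two boolean masks of equal shape."""
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0
```

In this sketch, a query mask would be passed through `warp_mask` with the estimated homography and then compared against the database mask with `iou`; masks from SAM2 would first need to be converted to boolean arrays of the image size.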